Understanding Open Source in AI: A New Frontier

Pruna AI, a young European startup, is open-sourcing its AI model optimization framework. The release makes a set of complex but important model compression methods openly available. Beyond the technical contribution, it is a meaningful step toward democratizing AI tools, because developers anywhere can now use and build on these techniques.

What is Model Compression and Why Does It Matter?

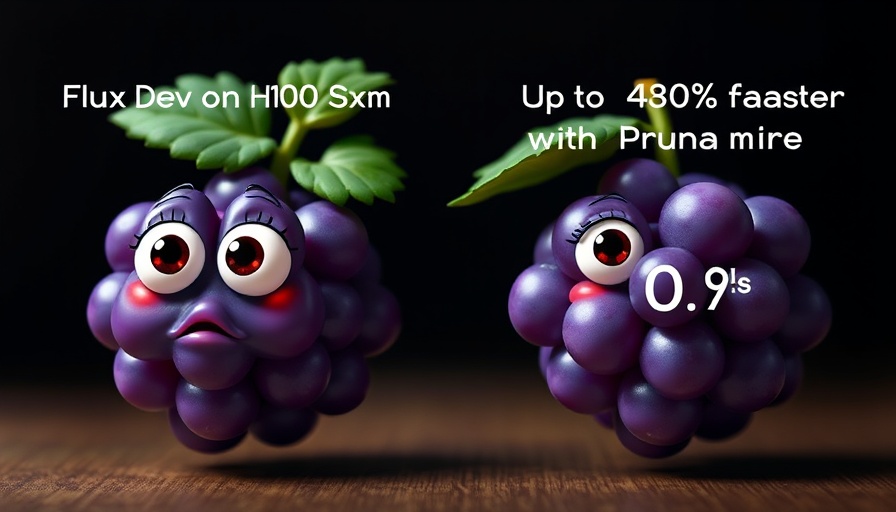

Model compression refers to a set of techniques that make AI models smaller or cheaper to run without significantly sacrificing accuracy. Pruna AI's framework incorporates several of them, including caching, pruning, quantization, and distillation, and each brings its own benefits. Distillation, for example, uses a teacher-student setup: a smaller “student” model is trained to reproduce the outputs of a larger, more complex “teacher” model. The student is then faster and easier to deploy while losing relatively little quality.
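To make three of these techniques concrete, here is a minimal pure-Python sketch of their textbook forms: symmetric per-tensor int8 quantization, magnitude pruning, and the temperature-softened distillation loss popularized by Hinton et al. It operates on plain lists instead of real model tensors, caching is left out, and it is not Pruna AI's implementation; all function names are hypothetical.

```python
import math


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Each float is stored as a small integer; `scale` recovers its approximate value.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float values."""
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Indices sorted by absolute value; the first k are the least important.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def softmax(logits, temperature=1.0):
    """Numerically stable softmax; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


weights = [0.5, -1.27, 0.01, 0.3]
codes, scale = quantize_int8(weights)
print(codes, dequantize(codes, scale))
print(magnitude_prune(weights, sparsity=0.5))
print(distillation_loss([1.0, 0.5, -0.2], [2.0, 0.1, -1.0]))
```

Quantization shrinks each weight from 32 bits to 8 at the cost of a rounding error of at most half the scale, pruning trades weights for sparsity, and the distillation loss is the quantity a student would minimize during training. Real frameworks apply these per layer and usually recalibrate or fine-tune afterwards.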

This has practical consequences: optimized models run faster and need less computational power, so businesses can cut costs and improve efficiency in applications ranging from large language models (LLMs) to image generation.
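A quick back-of-the-envelope calculation shows why lower precision matters. The sketch below estimates the memory needed just to hold the weights of a hypothetical 7-billion-parameter model at different bit widths; it deliberately ignores activations, caches, and runtime overhead, so real requirements are higher.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Hypothetical 7B-parameter model, chosen only for illustration.
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
# 32-bit: 28.0 GB
# 16-bit: 14.0 GB
#  8-bit: 7.0 GB
#  4-bit: 3.5 GB
```

Halving the bits per weight halves the weight memory, which can be the difference between needing several GPUs and fitting on one.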

Pruna AI’s Unique Offerings: A Comprehensive Solution

Unlike existing tools that specialize in a single compression method, Pruna AI combines multiple techniques in one framework. Co-founder and CTO John Rachwan explained that large AI labs such as OpenAI have traditionally built these capabilities in-house. Pruna AI offers an alternative by letting developers adopt, combine, and customize different compression strategies.

This flexibility matters because compression always trades speed against accuracy, and users can specify the balance they want. Pruna AI's upcoming compression agent aims to determine the best optimization parameters automatically, which would lower the barrier for developers who lack the expertise to tune these settings by hand.
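The core decision such an agent has to make can be sketched as a constrained search: among candidate compression configurations, pick the fastest one whose accuracy loss stays within the user's budget. The sketch below is a hypothetical illustration of that idea only; `Candidate`, `pick_config`, and the numbers are invented and do not reflect how Pruna AI's agent actually works.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str             # a combination of compression methods (hypothetical)
    speedup: float        # latency improvement vs. the original model, in "x times"
    accuracy_drop: float  # percentage points lost on a held-out evaluation


def pick_config(candidates, max_accuracy_drop):
    """Return the fastest candidate within the accuracy budget, or None if none qualify."""
    eligible = [c for c in candidates if c.accuracy_drop <= max_accuracy_drop]
    return max(eligible, key=lambda c: c.speedup, default=None)


# Made-up measurements, purely for illustration.
candidates = [
    Candidate("int8 quantization", speedup=1.8, accuracy_drop=0.4),
    Candidate("int8 + 50% pruning", speedup=2.6, accuracy_drop=1.7),
    Candidate("int4 + distillation", speedup=3.4, accuracy_drop=3.1),
]
best = pick_config(candidates, max_accuracy_drop=2.0)
print(best.name if best else "no configuration meets the budget")
```

A real agent would also have to generate and benchmark the candidates itself, which is the expensive part; the selection step shown here is the easy one.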

The Importance of Open Source in AI Innovation

By open-sourcing its framework, Pruna AI follows a broader trend that fosters collaboration and innovation. Open-source projects invite community engagement, letting developers from different backgrounds share insights and improvements, so progress can come faster than in siloed environments.

Open-source frameworks also make AI systems more transparent, which supports trust at a time when concerns about bias and the ethical implications of AI continue to grow.

Real-World Applications and User Adoption

Companies such as Scenario and PhotoRoom already use Pruna AI’s framework, which shows its practical value in industry. Its applicability is broad: beyond image and video generation, it can also be applied to speech recognition and other machine learning models.

In e-commerce and digital marketing, for instance, optimized models can support better customer interactions and more personalized user experiences without the high computational costs usually associated with powerful AI tools.

Future Trends: The Evolution of AI Model Optimization

AI model optimization is likely to evolve substantially in the coming years. As demand grows for efficient, high-performance AI applications, tools like Pruna AI's are well placed to play an important role. If the planned compression agent delivers what it promises, it could make high-quality AI technology accessible even to smaller players in the market.

This democratization of AI resources may lead to a surge in innovation, where startups and individual developers can leverage powerful AI tools without the need for substantial investment in infrastructure.

What This Means for Developers and Businesses

For developers and businesses, Pruna AI's open-source framework is an opportunity to use advanced AI capabilities at lower cost. Optimized models translate directly into lower inference bills from cloud providers. Rachwan compared paying for model optimization to renting GPU time, an operating expense that businesses can recoup through reduced inference costs while also improving the performance of their AI applications.
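The link between speedup and savings is simple arithmetic if inference cost scales with GPU time. The sketch below uses hypothetical traffic, latency, and hourly-rate figures (not real prices) and assumes the speedup applies to the whole request and that GPUs are billed only for the time they are busy; it also ignores the cost of the optimization itself.

```python
def monthly_inference_cost(requests_per_month, gpu_seconds_per_request, gpu_hourly_rate):
    """GPU cost of serving a month of traffic, assuming cost is proportional to GPU time."""
    gpu_hours = requests_per_month * gpu_seconds_per_request / 3600
    return gpu_hours * gpu_hourly_rate


# Hypothetical figures: 10M requests, 0.9 GPU-seconds each, $2.50 per GPU-hour.
baseline = monthly_inference_cost(10_000_000, 0.9, 2.50)
# Same workload after a hypothetical 2.5x speedup from compression.
optimized = monthly_inference_cost(10_000_000, 0.9 / 2.5, 2.50)

print(f"baseline:  ${baseline:,.0f}")              # baseline:  $6,250
print(f"optimized: ${optimized:,.0f}")             # optimized: $2,500
print(f"savings:   ${baseline - optimized:,.0f}")  # savings:   $3,750
```

Under these assumptions, savings grow linearly with traffic, which is why optimization pays off most for high-volume applications.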

As artificial intelligence continues to evolve, innovations like Pruna AI's will help shape its future. For anyone serious about harnessing AI in their ventures, this release deserves a close look.
